
Let us consider the linear homogeneous differential equation

$$\sum_{\nu=0}^{n} k_\nu(x)\,y^{(n-\nu)}(x) = 0$$

of order $n$. If the coefficient functions $k_\nu(x)$ are continuous and the coefficient $k_0(x)$ of the highest-order derivative does not vanish on a certain interval (resp. a domain in $\mathbb{C}$), then all solutions $y(x)$ are continuous on this interval (resp. domain). If all coefficients have continuous derivatives up to a certain order, the same holds for the solutions.

If, instead, $k_0(x)$ vanishes at a point $x_0$, this point is in general a singular point. After dividing the differential equation by $k_0(x)$ to obtain the form

$$y^{(n)}(x) + \sum_{\nu=1}^{n} c_\nu(x)\,y^{(n-\nu)}(x) = 0,$$

some of the new coefficients $c_\nu(x)$ are discontinuous at the singular point. However, if the discontinuity is so restricted that the products

$$(x-x_0)\,c_1(x),\quad (x-x_0)^2 c_2(x),\quad \ldots,\quad (x-x_0)^n c_n(x)$$

are continuous, and even analytic, at $x_0$, the point $x_0$ is a regular singular point of the differential equation.
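As a brief illustration (an example chosen here, not taken from the source), consider Bessel's equation of order $m$. Dividing by $x^2$ gives the coefficient $1/x$ for $y'$ and $(x^2-m^2)/x^2$ for $y$, both discontinuous at the origin; multiplying them by $x$ and $x^2$ respectively leaves analytic functions, so the origin is a regular singular point:

$$x^2 y'' + x\,y' + (x^2 - m^2)\,y = 0 \;\Longrightarrow\; x\cdot\frac{1}{x} = 1, \qquad x^2\cdot\frac{x^2-m^2}{x^2} = x^2 - m^2 .$$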

We introduce the so-called Frobenius method for finding solutions in a neighbourhood of the regular singular point $x_0$, confining ourselves to the case of a second-order differential equation. When we use the quotient forms

$$(x-x_0)\,c_1(x) := \frac{p(x)}{r(x)}, \qquad (x-x_0)^2 c_2(x) := \frac{q(x)}{r(x)},$$

where $r(x)$, $p(x)$ and $q(x)$ are analytic in a neighbourhood of $x_0$ and $r(x_0) \neq 0$, our differential equation reads

$$(x-x_0)^2\,r(x)\,y''(x) + (x-x_0)\,p(x)\,y'(x) + q(x)\,y(x) = 0. \tag{1}$$

Since the simple change of variable $x - x_0 \mapsto x$ moves the singular point to the origin, we may assume this situation from the start. Thus we study the equation

$$x^2\,r(x)\,y''(x) + x\,p(x)\,y'(x) + q(x)\,y(x) = 0, \tag{2}$$

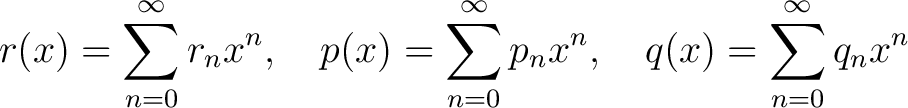

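where the coefficients have the converging power series expansions

$$r(x) = \sum_{n=0}^{\infty} r_n x^n, \qquad p(x) = \sum_{n=0}^{\infty} p_n x^n, \qquad q(x) = \sum_{n=0}^{\infty} q_n x^n \tag{3}$$

and $r_0 \neq 0$.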

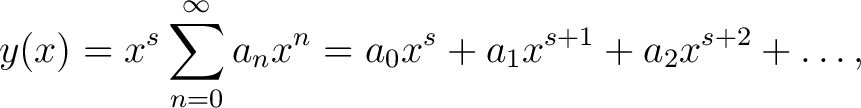

In the Frobenius method one examines whether the equation (2) admits a series solution of the form

$$y(x) = x^s \sum_{n=0}^{\infty} a_n x^n = a_0 x^s + a_1 x^{s+1} + a_2 x^{s+2} + \ldots, \tag{4}$$

where $s$ is a constant and $a_0 \neq 0$.

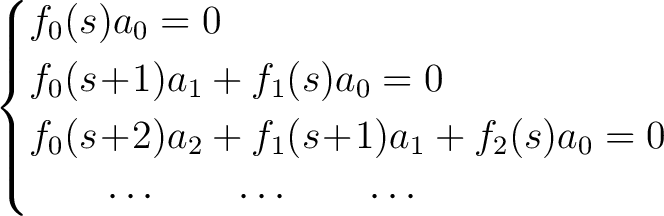

Substituting (3) and (4) into the differential equation (2) converts the left-hand side to

$$[r_0 s(s-1) + p_0 s + q_0]\,a_0 x^s + \bigl[[r_0 (s+1)s + p_0 (s+1) + q_0]\,a_1 + [r_1 s(s-1) + p_1 s + q_1]\,a_0\bigr]\,x^{s+1} + \ldots$$

The equation becomes clearer with the notation

$$f_\nu(s) := r_\nu\,s(s-1) + p_\nu\,s + q_\nu \qquad (\nu = 0, 1, 2, \ldots):$$

$$f_0(s)a_0x^s+[f_0(s+1)a_1+f_1(s)a_0]x^{s+1}+[f_0(s+2)a_2+f_1(s+1)a_1+f_2(s)a_0]x^{s+2}+\ldots = 0 \tag{5}$$

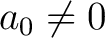

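Thus the condition for (4) to satisfy the differential equation is the infinite system of equations

$$\begin{cases} f_0(s)\,a_0 = 0 \\ f_0(s+1)\,a_1 + f_1(s)\,a_0 = 0 \\ f_0(s+2)\,a_2 + f_1(s+1)\,a_1 + f_2(s)\,a_0 = 0 \\ \qquad\vdots \end{cases} \tag{6}$$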
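Reading off the coefficient of $x^{s+n}$ in (5), the pattern of these equations can be written compactly. The following form is a restatement added here for convenience; the summation index $k$ is a notation not used in the source:

$$\sum_{k=0}^{n} f_{n-k}(s+k)\,a_k = 0 \quad (n = 0, 1, 2, \ldots), \qquad\text{i.e.}\qquad f_0(s+n)\,a_n = -\sum_{k=0}^{n-1} f_{n-k}(s+k)\,a_k \quad (n \ge 1).$$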

In the first place, since $a_0 \neq 0$, the indicial equation

$$f_0(s) \equiv r_0 s^2 + (p_0 - r_0)\,s + q_0 = 0 \tag{7}$$

must be satisfied. Because $r_0 \neq 0$, this quadratic equation determines two values for $s$, which in special cases may coincide.
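The indicial equation is an ordinary quadratic, so its roots can be computed directly. The sketch below is only an illustration; the function name `indicial_roots` and the Bessel example are assumptions introduced here, not part of the source. It takes the leading expansion coefficients $r_0$, $p_0$, $q_0$ and returns both roots, the one with the greater real part first.

```python
import cmath

def indicial_roots(r0, p0, q0):
    """Roots of r0*s^2 + (p0 - r0)*s + q0 = 0, larger real part first.

    Hypothetical helper for illustration; r0 must be nonzero.
    """
    if r0 == 0:
        raise ValueError("r0 must be nonzero at a regular singular point")
    b = p0 - r0
    disc = cmath.sqrt(b * b - 4 * r0 * q0)  # complex sqrt handles non-real roots
    s1 = (-b + disc) / (2 * r0)
    s2 = (-b - disc) / (2 * r0)
    return tuple(sorted((s1, s2), key=lambda s: s.real, reverse=True))

# Bessel's equation of order 1/2: x^2 y'' + x y' + (x^2 - 1/4) y = 0,
# so r0 = 1, p0 = 1, q0 = -1/4 and the indicial equation is s^2 - 1/4 = 0.
print(indicial_roots(1, 1, -0.25))
```

The roots are returned as complex numbers even when they are real, which keeps the non-real conjugate case uniform.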

The first of the equations (6) leaves $a_0$ ($\neq 0$) arbitrary. The subsequent equations, linear in the $a_n$, can be solved successively for the constants $a_1, a_2, \ldots$ provided that the leading coefficients $f_0(s+1)$, $f_0(s+2)$, $\ldots$ do not vanish; this is evidently the case when the roots of the indicial equation do not differ by an integer (e.g. when the roots are non-real complex conjugates), or when $s$ is the root having the greater real part. In any case, one obtains definite values of the coefficients $a_n$ in the series (4) for at least one of the roots of the indicial equation. It is not hard to show that this series then converges in a neighbourhood of the origin.
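The successive solution of the system (6) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a definitive implementation: the names `f_nu` and `frobenius_coefficients` are hypothetical, the expansion coefficients of $r$, $p$, $q$ are passed as finite lists (missing entries are treated as zero), and exact rational arithmetic is used so that a vanishing $f_0(s+n)$ is detected reliably.

```python
from fractions import Fraction

def f_nu(nu, s, r, p, q):
    """f_nu(s) = r_nu*s*(s-1) + p_nu*s + q_nu; entries past the list end count as 0."""
    get = lambda c: c[nu] if nu < len(c) else 0
    return get(r) * s * (s - 1) + get(p) * s + get(q)

def frobenius_coefficients(r, p, q, s, n_terms, a0=1):
    """Solve the system (6) successively for a_1, ..., a_{n_terms-1}.

    s is assumed to be a root of the indicial equation (not checked here).
    """
    a = [Fraction(a0)]
    for n in range(1, n_terms):
        lead = f_nu(0, s + n, r, p, q)
        if lead == 0:
            raise ZeroDivisionError(f"f_0(s+{n}) vanishes; the recursion breaks down")
        rhs = -sum(f_nu(n - k, s + k, r, p, q) * a[k] for k in range(n))
        a.append(rhs / lead)
    return a

# Bessel's equation of order 0: x^2 y'' + x y' + x^2 y = 0,
# i.e. r = 1, p = 1, q = x^2, with the double indicial root s = 0.
coeffs = frobenius_coefficients([1], [1], [0, 0, 1], 0, 5)
print(coeffs)  # a_0..a_4 = 1, 0, -1/4, 0, 1/64
```

The resulting coefficients agree with the familiar series $1 - x^2/4 + x^4/64 - \ldots$ of the Bessel function $J_0$. Passing `s` as a `Fraction` keeps the arithmetic exact for rational roots.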

To obtain the complete solution of the differential equation (2) it suffices to have only one solution $y_1(x)$ of the form (4), because another solution $y_2(x)$, linearly independent of $y_1(x)$, is obtained by mere integrations; it is then possible, in the cases $s_1 - s_2 \in \mathbb{Z}$ (where $s_1$, $s_2$ are the roots of the indicial equation), that $y_2(x)$ has no expansion of the form (4).
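The integrations in question are presumably those of the standard reduction-of-order formula; this is an assumption about what the text intends, stated here for a second-order equation normalized to $y'' + P(x)\,y' + Q(x)\,y = 0$ (the symbols $P$ and $Q$ are introduced only for this illustration):

$$y_2(x) = y_1(x) \int \frac{\exp\!\left(-\int P(x)\,dx\right)}{y_1(x)^2}\,dx .$$

When the integrand contains a term proportional to $1/x$, the integration produces a logarithm, which is one way in which $y_2(x)$ can fail to have an expansion of the form (4).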

- [1] PENTTI LAASONEN: Matemaattisia erikoisfunktioita. Handout No. 261. Teknillisen Korkeakoulun Ylioppilaskunta; Otaniemi, Finland (1969).
